Securing Your Terraform Deployment On AWS Via Gitlab-Ci And Vault – Part 3

How do you secure your Terraform deployment on AWS using Gitlab-CI and Vault? In the previous articles, we looked at the problems of CI/CD deployments on the cloud, and then at how to solve them by using Vault to generate dynamic secrets and authenticate the Gitlab-CI pipeline. In this third and final article, we will discuss how the application retrieves its secrets.

The challenge on the application side

We have seen in the previous article how to solve the various problems encountered in the pipeline. However, our Gitlab-CI pipeline deploys not only the infrastructure but also the application itself.

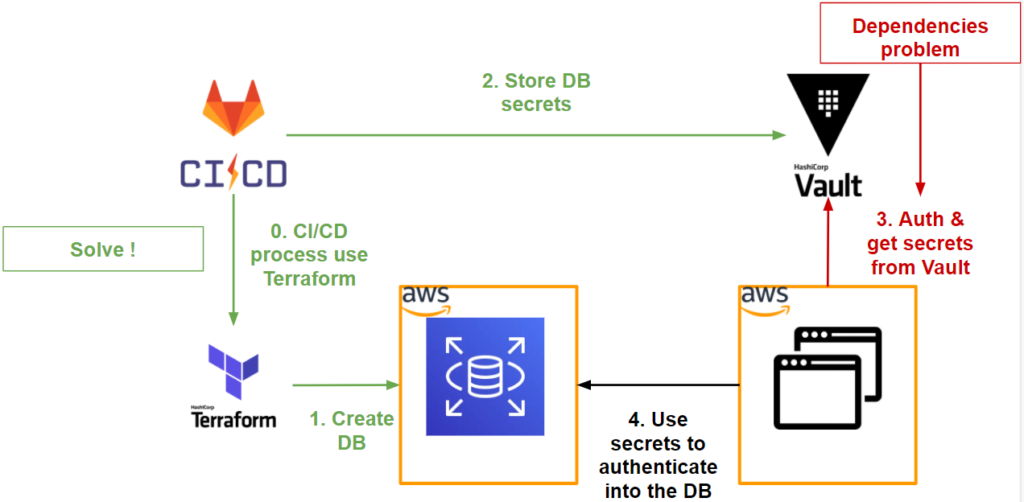

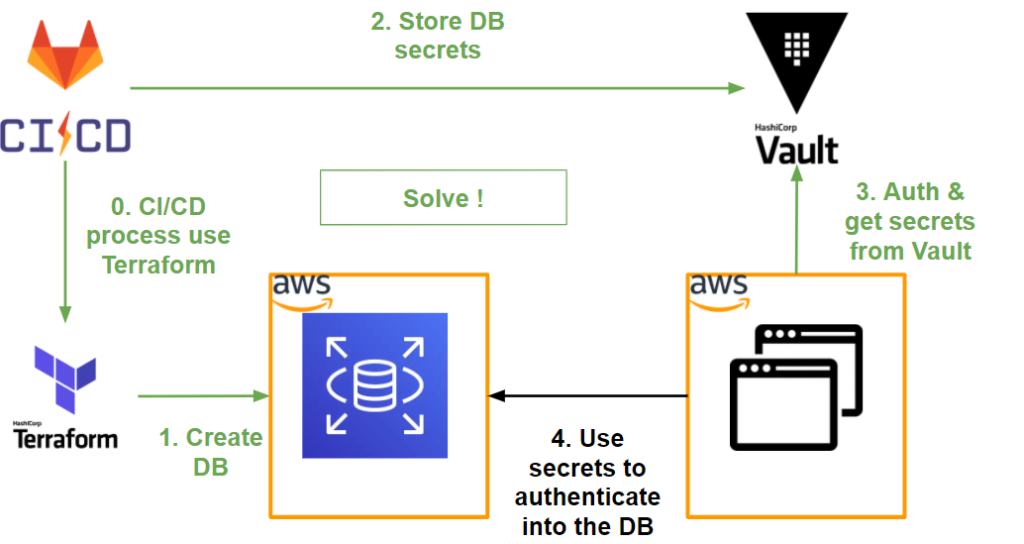

If we summarise what we have managed to do so far at the workflow level, we have the following diagram:

As we can see, our application needs to retrieve the secrets stored in Vault in order to connect to the database.

However, we want the interaction between the application and Vault to be as seamless as possible, and to do this we need to reduce dependencies:

- Authentication: which method should our application use to make it as transparent and secure as possible?

- Use of secrets: how can the application retrieve a dynamic secret (short TTL) without impacting its code?

Application authentication

When it comes to authenticating our application with Vault, if we want it to be as seamless and secure as possible, it is important to base this on the environment in which our application is deployed.

Our application is deployed on AWS, which is perfect because Vault provides an AWS authentication method.

This authentication method has two types: IAM and EC2.

As our application is deployed on an EC2 instance, we will use the EC2 type.

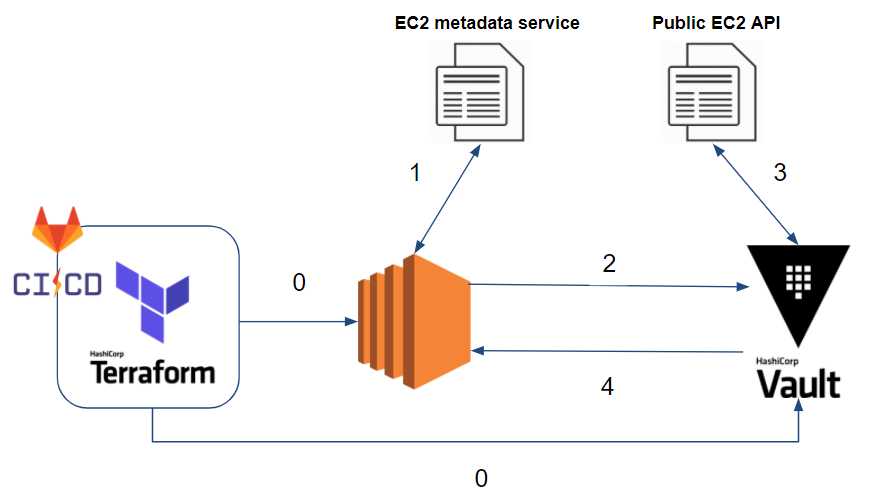

If we look closely at how this method works, we have the following scenario:

- 0 – So far our Gitlab-CI, through Terraform, has deployed our application to an EC2 instance and stored the database secrets in Vault.

- 1 – Our EC2 instance, once deployed, gets its metadata through the EC2 metadata service (e.g. Instance ID, subnet and VPC ID where our EC2 instance is deployed, etc). You can find more details on the official AWS documentation.

- 2 – Our application authenticates to Vault through the AWS EC2 method, using the PKCS7 signature of the instance identity document.

- 3 – Vault verifies the identity and that the EC2 instance hosting our application meets our authentication requirements (bound parameters) (e.g. Is it in the right VPC and subnet? Is it the right instance ID? etc)

- 4 – If the authentication is successful, Vault returns a token.

To implement this authentication method, two things are needed:

- Vault must be able to verify the identity and metadata of the EC2 instance on the target AWS account.

- We must specify to Vault the authentication requirements (bound parameters) that the application has to meet in order to authenticate.

Identity and metadata verification

In order for Vault to be able to check the information in our EC2 instance, it needs rights to the target account to describe the instance via the following action: ec2:DescribeInstances.

To do this, and to follow the same logic as in our previous demonstrations, we’ll create an IAM role for Vault to assume.

The IAM role should contain the following policy:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "ec2:DescribeInstances"
          ],
          "Resource": "*"
        }
      ]
    }

Its trust relationship must authorise the source account (where Vault is located) to assume the IAM role. On the Vault side, we configure the AWS authentication backend through Terraform:

    resource "vault_auth_backend" "aws" {
      description = "Auth backend to auth project in AWS env"
      type        = "aws"
      path        = "${var.project_name}-aws"
    }

    resource "vault_aws_auth_backend_sts_role" "role" {
      backend    = vault_auth_backend.aws.path
      account_id = split(":", var.vault_aws_assume_role)[4]
      sts_role   = var.vault_aws_assume_role
    }

As we can see, we also specify the IAM role (STS) that Vault should assume.

At this point, Vault is able to verify the information in our EC2 instance.
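
The IAM role and its trust relationship are described above but not shown as Terraform. A minimal sketch could look like the following. The variable `vault_account_id`, the role name and the resource labels are assumptions made for illustration, and a stricter setup would probably restrict the principal to the specific IAM identity used by Vault rather than the whole account.

```hcl
# Hypothetical variable: ID of the AWS account where Vault runs.
variable "vault_account_id" {
  type        = string
  description = "AWS account ID hosting Vault (assumed name)"
}

# Trust relationship: allow principals of the Vault account to assume the role.
data "aws_iam_policy_document" "vault_trust" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRole"]

    principals {
      type        = "AWS"
      identifiers = ["arn:aws:iam::${var.vault_account_id}:root"]
    }
  }
}

# Permissions: the single action Vault needs to check the instance.
data "aws_iam_policy_document" "vault_describe" {
  statement {
    effect    = "Allow"
    actions   = ["ec2:DescribeInstances"]
    resources = ["*"]
  }
}

resource "aws_iam_role" "vault_ec2_auth" {
  name               = "vault-ec2-auth" # hypothetical name
  assume_role_policy = data.aws_iam_policy_document.vault_trust.json
}

resource "aws_iam_role_policy" "vault_describe" {
  name   = "describe-instances"
  role   = aws_iam_role.vault_ec2_auth.id
  policy = data.aws_iam_policy_document.vault_describe.json
}
```

Under these assumptions, the ARN of this role (`aws_iam_role.vault_ec2_auth.arn`) is the value that would be passed as `var.vault_aws_assume_role`.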

Setting the authentication constraints (bound parameters)

In terms of security, under what conditions do we allow our application to authenticate to Vault to retrieve its secrets?

If we take a closer look at the EC2 type of Vault's AWS authentication method, we can base it on several criteria, such as: AMI ID, account ID, region, VPC ID, subnet ID, IAM role ARN, instance profile ARN or EC2 instance ID.

We can specify multiple criteria and multiple values for each criterion. For authentication to be accepted by Vault, every criterion we specify must be satisfied by one of its values.

In our case, our EC2 instance is deployed by Terraform. This is ideal, as through Terraform we are able to retrieve all the attributes of our instance and specify them on the Vault side as bound parameters.

This gives us the following Terraform snippet to create the Vault role for our AWS authentication backend:

    resource "vault_aws_auth_backend_role" "web" {
      backend           = local.aws_backend
      role              = var.project_name
      auth_type         = "ec2"
      bound_ami_ids     = [data.aws_ami.amazon-linux-2.id]
      bound_account_ids = [data.aws_caller_identity.current.account_id]
      bound_vpc_ids     = [data.aws_vpc.default.id]
      bound_subnet_ids  = [aws_instance.web.subnet_id]
      # Recent versions of the Vault provider expect a list here;
      # older versions used the singular bound_region.
      bound_regions     = [var.region]
      token_ttl         = var.project_token_ttl
      token_max_ttl     = var.project_token_max_ttl
      token_policies    = ["default", var.project_name]
    }

In our case, we rely on the AMI ID, account ID, VPC ID, subnet ID and AWS region. We could also have added the instance ID to strengthen the security of our authentication, but this criterion should be avoided in an Auto Scaling Group, where instances are replaced and their IDs change.
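
For a standalone instance that is not part of an Auto Scaling Group, pinning the role to the instance ID might look like the sketch below. It reuses the `aws_instance.web` resource and the variables referenced in the snippet above; the resource label and the role name are hypothetical, and the `bound_ec2_instance_ids` argument should be checked against the version of the Vault provider in use.

```hcl
# Variant of the role above, pinned to a single instance (not suitable for an ASG).
resource "vault_aws_auth_backend_role" "web_pinned" {
  backend   = local.aws_backend
  role      = "${var.project_name}-pinned" # hypothetical role name
  auth_type = "ec2"

  # Both criteria must match: the account AND the exact instance.
  bound_account_ids      = [data.aws_caller_identity.current.account_id]
  bound_ec2_instance_ids = [aws_instance.web.id]

  token_ttl      = var.project_token_ttl
  token_max_ttl  = var.project_token_max_ttl
  token_policies = ["default", var.project_name]
}
```

The trade-off is that the role has to be updated every time the instance is recreated, which is why broader criteria such as the subnet or the AMI are easier to live with.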

At this point, our application is able to authenticate to Vault and retrieve its secrets.

Using the secrets of our application

To integrate Vault into our application, we will use the Vault agent.

For those of you who would like more details on the Vault agent integration, you can refer to the following article.

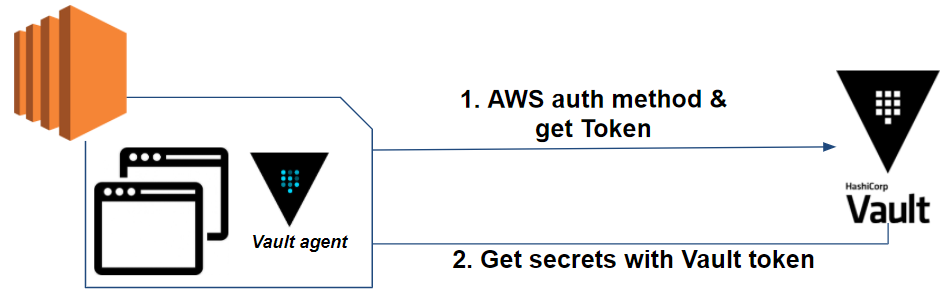

Let’s take a closer look at our application’s workflow with Vault:

As we can see, the Vault agent handles two phases:

- AWS authentication with Vault and rotation of the Vault token

- Retrieval of the secrets and their renewal

This gives us the following Vault agent configuration:

    auto_auth {
      method {
        mount_path = "auth/${vault_auth_path}"
        type       = "aws"

        config = {
          type = "ec2"
          role = "web"
        }
      }

      sink {
        type = "file"

        config = {
          path = "/home/ec2-user/.vault-token"
        }
      }
    }

    template {
      source      = "/var/www/secrets.tpl"
      destination = "/var/www/secrets.json"
    }

This file can be templatized by Terraform to override certain values such as the Vault role name or the mount_path.

Finally, on the secret template side, we want to get the secrets in JSON format, which gives us the following template:

    {
      {{ with secret "web-db/creds/web" }}
      "username":"{{ .Data.username }}",
      "password":"{{ .Data.password }}",
      "db_host":"${db_host}",
      "db_name":"${db_name}"
      {{ end }}
    }
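
The templating mentioned above can be sketched with Terraform's `templatefile` function. The file paths, the local value names and the `db_host` / `db_name` variables below are assumptions made for illustration; only the placeholder names (`vault_auth_path`, `db_host`, `db_name`) come from the files shown in this article.

```hcl
# Hypothetical inputs; in practice these could come from the database resource.
variable "db_host" {
  type = string
}

variable "db_name" {
  type = string
}

locals {
  # Render the Vault agent configuration, filling in the vault_auth_path placeholder.
  vault_agent_config = templatefile("${path.module}/templates/vault-agent.hcl.tpl", {
    vault_auth_path = vault_auth_backend.aws.path
  })

  # Render the secrets template, filling in the db_host and db_name placeholders.
  # The {{ ... }} blocks are not Terraform template syntax, so they pass through
  # untouched and are evaluated later by the Vault agent.
  secrets_template = templatefile("${path.module}/templates/secrets.tpl", {
    db_host = var.db_host
    db_name = var.db_name
  })
}
```

The rendered strings could then be written to the instance, for example through its user data, at the paths the agent expects (`/var/www/secrets.tpl` for the template).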

The Vault agent takes care of everything related to Vault (token, secrets, renewal, etc.), leaving our application to simply read its secrets from the relevant file:

    // Secrets file rendered by the Vault agent
    $secrets_file = "/var/www/secrets.json";

    if (file_exists($secrets_file)) {
        // Read and decode the file once instead of once per field
        $secrets = json_decode(file_get_contents($secrets_file));
        $user    = $secrets->username;
        $pass    = $secrets->password;
        $host    = $secrets->db_host;
        $dbname  = $secrets->db_name;
    } else {
        echo "Secrets not found.";
        exit;
    }

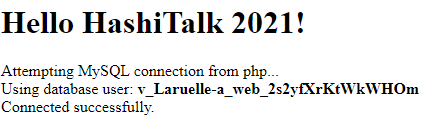

This gives us the expected result:

Things to remember

To summarise what we have covered in this series:

- Terraform has a Vault provider to simplify the interaction between the two tools.

- It is possible to authenticate a pipeline or a specific branch of a Gitlab-CI job with Vault via the JWT authentication method.

- Vault allows cloud provider credentials to be generated for deploying IaC via Terraform. For AWS, it is able to assume IAM roles on multiple AWS accounts allowing our Terraform to deploy IaC on multiple AWS accounts.

- Vault allows for the centralization of multiple types of secrets for a project in an environment-agnostic manner, including secrets generated by the IaC.

- Vault agent simplifies the integration of Vault into an application by handling authentication and the lifecycle of secrets.

- The Vault token used by the Vault agent is short-lived and changes frequently.

- The application secrets (database credentials in our example) have a short lifespan and are renewed regularly by the Vault agent.

- We allow our application to authenticate to the Vault depending on the environment it is in. For our application in AWS, we rely on AWS EC2 authentication and bound parameters such as the subnet ID where our application is located, the VPC ID, the AWS region, etc.

As we have seen in this article, Vault allows us to secure our Terraform deployment through Gitlab-CI from end to end, covering both the IaC and the application itself.

The Vault agent also allows us to reduce the application's dependencies on Vault.

Used in the right way, this integration can be transparent to ops, dev and applications. Secrets become transparent to all and have a short life cycle. Why seek to know a secret if we can have transparent Secret as a Service?