Securing Your Terraform Deployment On AWS Via Gitlab-Ci And Vault – Part 1

In previous articles we saw how to use HashiCorp Vault to centralise static and dynamic secrets and to provide Encryption as a Service.

In this series of articles, we will go further and see how to secure your Terraform deployment on AWS using Gitlab-CI and Vault. This first article sets out the problem posed by CI/CD deployments to the cloud.

Prerequisites

Note: At the time of writing, Vault is at version 1.6.3.

Another clarification: we rely on the free version of gitlab.com and on Gitlab Community Edition (CE).

This article assumes some familiarity with the following:

- Vault: basic mechanics such as authentication and secret types.

- AWS: IAM (role, assume role, etc.) and EC2 (metadata, instance profile, etc.) will be covered but do not require an advanced level. Some basic knowledge of AWS is nevertheless preferable.

- Gitlab-CI: the basics on the CI part of Gitlab (gitlab-ci.yml, pipeline, etc).

- Terraform: the basics will be sufficient.

Finally, this article follows the article Reducing code dependency with Vault Agent.

The challenge of our continuous integration

CI/CD is commonly used in Infrastructure as Code (IaC) and application deployments on Cloud platforms. These platforms offer deployment flexibility through their APIs.

However, how do we provide access to our CI in these environments in a secure manner?

In this article we will focus on Gitlab-CI for our CI and on Terraform for the IaC, in order to remain environment agnostic.

AWS is taken as an example, but the same logic applies to any other cloud provider.

A CI external to AWS, a complexity factor

AWS services integrate well with one another, as long as we stay within the AWS ecosystem.

Things get more complicated with a CI external to AWS, Gitlab-CI in our case, which needs to call AWS to deploy and configure services.

Let’s take the following scenario as an example: we have an application on an EC2 instance containing a web server that uses a MySQL database on RDS. We want to deploy this infrastructure as code with Terraform using Gitlab-CI.

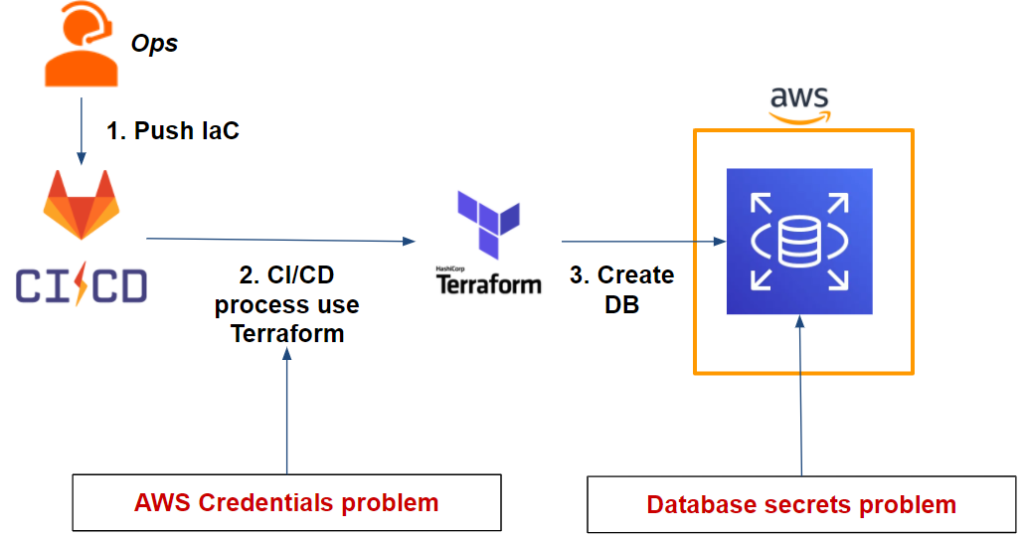

This gives us the following workflow:

As we can see from our workflow, we have two issues:

- Our Gitlab-CI pipeline: Terraform needs AWS credentials to be able to deploy the RDS database on AWS.

- The database: We need to securely store the admin user credentials that will be generated once the database is deployed.
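To make the scenario concrete, the RDS part of the deployment might be declared roughly as follows. This is only a minimal sketch: the resource name `app_db`, the variable `db_admin_password`, the region and the instance sizing are all assumptions chosen for illustration, not values from the original setup.

```hcl
# Hypothetical variable: the admin password has to come from somewhere,
# which is exactly the second issue listed above.
variable "db_admin_password" {
  type      = string
  sensitive = true
}

# The provider needs AWS credentials to create anything,
# which is the first issue listed above.
provider "aws" {
  region = "eu-west-1" # assumed region
}

# Illustrative MySQL database on RDS (names and sizes are assumptions).
resource "aws_db_instance" "app_db" {
  identifier          = "app-db"
  engine              = "mysql"
  instance_class      = "db.t3.micro"
  allocated_storage   = 20
  username            = "admin"
  password            = var.db_admin_password
  skip_final_snapshot = true
}
```

Both issues are visible here: nothing in this configuration says where the AWS credentials or the admin password come from, and the pipeline has to supply them.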

Trying to solve the challenge by thinking AWS

Let’s focus on our first issue regarding our Gitlab-CI pipeline and our AWS credentials. How can we solve this problem?

First attempt: the IAM user and environment variables

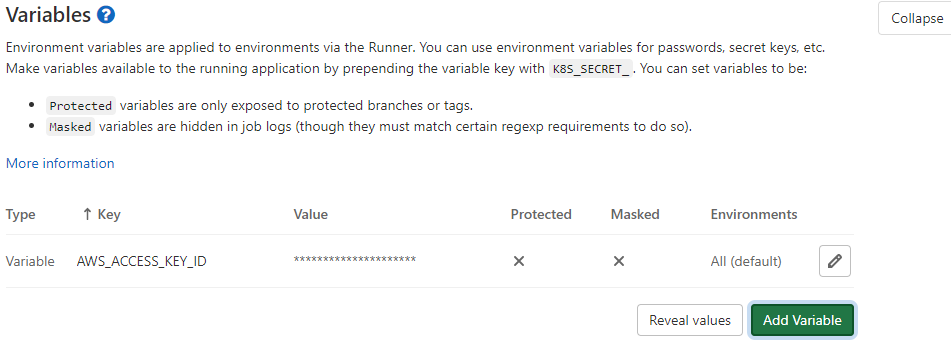

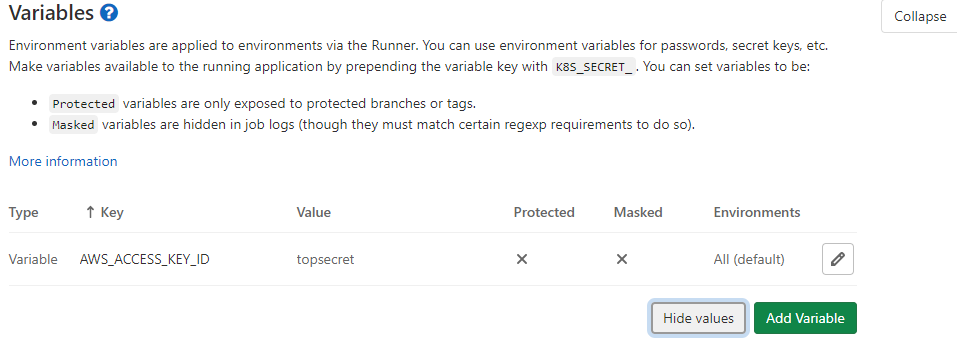

The easiest and quickest way to solve this problem is to create an IAM user and generate an access key and secret key pair, which we then store in the CI/CD variables of our Gitlab project.

In this way, Terraform will be able to deploy the application infrastructure on AWS, and in particular our RDS database. This works because Terraform is able to retrieve AWS credentials from environment variables.
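As a rough illustration of this first attempt, the Terraform side could look like the sketch below. The region is an assumption; the point is only that the provider block carries no credentials, because the AWS provider reads the standard `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` environment variables when they are set in the job.

```hcl
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
    }
  }
}

# No access_key / secret_key arguments here: the provider falls back to
# the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables,
# which Gitlab-CI exposes to the job when they are defined as CI/CD variables.
provider "aws" {
  region = "eu-west-1" # assumed region
}
```

Keeping the keys out of the code is convenient, but the secret still exists as a long-lived value in the project settings.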

This method is indeed the simplest and quickest way to solve the problem mentioned above, but it raises several issues:

- The credentials are static, so a rotation system must be set up. As a corollary, that system will itself need access to both Gitlab-CI and AWS to perform the rotation, which leads to further issues around access and credentials.

- It is difficult to set up separate credentials for each environment and to isolate them by Git branch (e.g. master, dev, features/*).

- Anyone with at least Maintainer or Owner permissions on a project is able to see and modify its CI/CD variables. Thus, some users who have privileged rights on Gitlab, but no legitimate need for these application or CI secrets, will be able to retrieve or even modify them. Taking the idea further, a user who can retrieve a secret whose use is not restricted by source (e.g. filtering on the source IP) will be able to use it outside the CI. This makes it impossible to identify who is actually using the secret, or whether that use is legitimate.

This situation can quickly lead to a loss of control over access to and use of the secret.

Second attempt: use of an IAM role and/or AWS instance profile

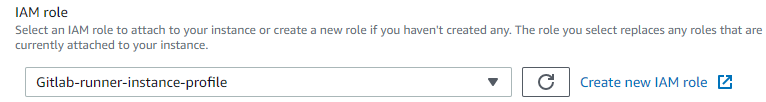

A second option is possible if your Gitlab Runner is on AWS.

Starting from our first attempt, we can delete our IAM user, remove its credentials from the Gitlab CI/CD variables, and create an IAM role instead.

The goal is for our Gitlab Runner to assume the IAM role in order to benefit from temporary credentials.

If our Gitlab Runner is on an EC2 instance, we just need to attach an instance profile to it.

If it is on AWS ECS (Elastic Container Service), we will have to assign an IAM role to our container.

It is also possible to do the same thing with EKS (Elastic Kubernetes Service).
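For the EC2 case, the role and instance profile could be declared along these lines. This is a sketch under stated assumptions: the names `gitlab_runner` and `runner_ami_id` and the instance type are hypothetical, and the permissions that the role would need in order to deploy the infrastructure are deliberately left out.

```hcl
# Hypothetical input: the AMI used for the runner instance.
variable "runner_ami_id" {
  type = string
}

# Trust policy: lets the EC2 service assume the role on behalf of the instance.
data "aws_iam_policy_document" "runner_assume" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["ec2.amazonaws.com"]
    }
  }
}

resource "aws_iam_role" "gitlab_runner" {
  name               = "gitlab-runner"
  assume_role_policy = data.aws_iam_policy_document.runner_assume.json
}

# The instance profile is the container that passes the role to EC2.
resource "aws_iam_instance_profile" "gitlab_runner" {
  name = "gitlab-runner"
  role = aws_iam_role.gitlab_runner.name
}

# Every job running on this instance gets temporary credentials for the role.
resource "aws_instance" "gitlab_runner" {
  ami                  = var.runner_ami_id
  instance_type        = "t3.small" # assumed size
  iam_instance_profile = aws_iam_instance_profile.gitlab_runner.name
}
```

Note that the role is bound to the instance, not to a project or a branch: any job scheduled on this runner inherits the same permissions.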

The issues raised in the previous attempt are resolved. However, new ones appear:

- Credentials apply at the Gitlab Runner level. This means that all Gitlab-CI projects that have access to this container/Gitlab Runner will have the same IAM privileges, whereas we want least privilege for each project. Even with one Gitlab Runner per project, it is difficult to isolate the Gitlab Runner privileges for each environment or per Git branch (e.g. master, dev, features/*).

- This solution is only usable if we are able to deploy our Gitlab Runner on AWS, which is not the case for everyone.

As we can see from this second attempt, the real challenge is not to give our Gitlab Runner or our project access to AWS, but rather to give access to our Gitlab-CI pipeline for a specific environment, following the principle of least privilege (especially from a cloud-agnostic perspective).

Furthermore, we have not yet addressed the issue of database secrets. So in the next article we’ll look at this question: how can we allow a Gitlab-CI job to use and store secrets? (Spoiler: by using Vault.)