How to calculate the carbon footprint of training/running a large AI model in the cloud

GPT-3, GPT-4, ChatGPT, Bard, Stable Diffusion, Midjourney and DALL-E are generative AI systems built on large AI models: systems that can generate text, images or other media in response to prompts. Training models this large requires vast amounts of data and computing resources.

The question, then, is: how big are these models’ footprints? “A main problem to tackle in reducing AI’s climate impact is to quantify its energy consumption and carbon emission and to make this information transparent.” (Dhar, 2020).

Carbon footprint

The carbon footprint measures the greenhouse gases emitted by an activity, a person or a country (Gouvernement.fr, 2016). Here, the activity is training a large AI model. Note that emissions from the experimentation phase and from running inference are not necessarily included in the figures presented below.

Large AI model

Before talking about large AI models, it’s essential to understand what AI is. In simple terms, AI is a scientific field that ‘studies’ (i.e., develops theories, models and experiments about) the ‘intelligence’ demonstrated by machines, hence the term ‘artificial’ (Wikipedia.org [1], N.A.).

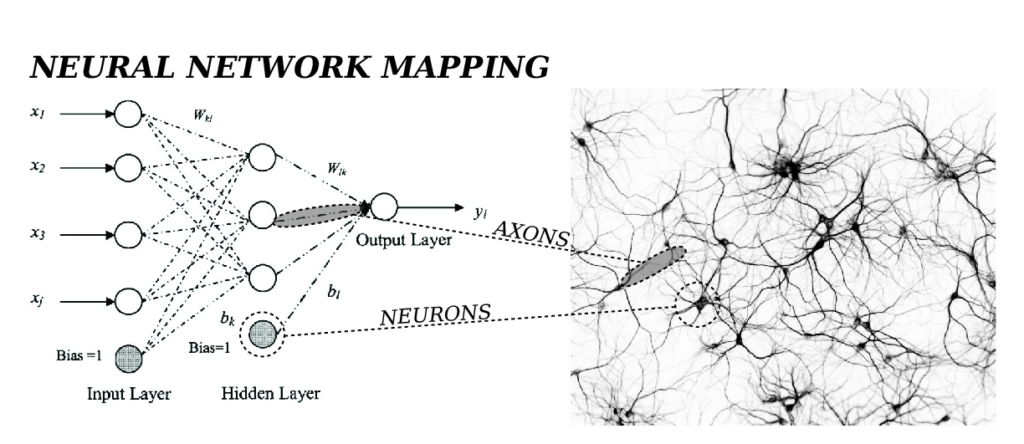

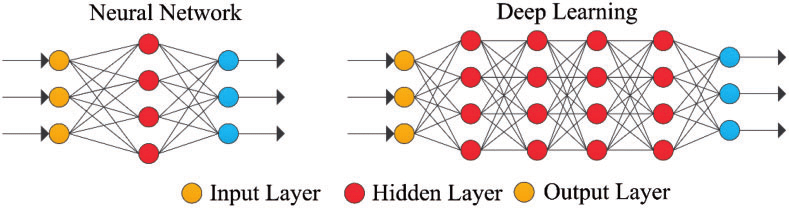

Within the AI field sits Machine Learning (ML), a study area “responsible for understanding and building methods that let machines ‘learn’” (Wikipedia.org [2], N.A.). Within ML, in turn, sits Deep Learning (DL), which uses Artificial Neural Networks (ANNs): methods inspired by the way biological systems (for example, our brain) process information. Figure 1 shows the relationship between an ANN and the brain’s neural network.

Figure 1 – (Image credit to RPI Cloud Assembly).

To get closer to the brain’s neural network architecture (and improve the quality of the results), we need to add more and more ‘neurons’ to the ANN (the circles in Figures 1 and 2), usually arranged in additional hidden layers. This is how we move from a simple ANN to Deep Learning (DL). These deep architectures, neural networks with many hidden layers containing many neurons, are also called large models.

Figure 2 – Neural Network vs. Deep Learning Network (Xing & Du, 2018).

Green AI vs. Red AI

AI research can be split into two main streams: Red AI and Green AI. Red AI refers to research that focuses mainly on increasing a model’s accuracy by using massive amounts of computing power.

Green AI, on the other hand, refers to research that also takes into account carbon emissions, electricity consumption, execution time, number of parameters and number of floating-point operations (FPO).
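To make two of these metrics, number of parameters and FPO, more concrete, here is a minimal sketch for a plain fully connected network. The architecture and layer sizes are hypothetical assumptions (the large models discussed in this article use more complex architectures); the point is only to show how adding hidden layers and neurons, as described for Deep Learning above, inflates both numbers.

```python
"""Count parameters and forward-pass floating-point operations (FPO)
for a plain fully connected network (an illustrative assumption)."""


def dense_param_count(layer_sizes: list[int]) -> int:
    """Weights plus biases for layers sized [inputs, hidden..., outputs]."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # weight matrix + one bias per output neuron
    return total


def dense_forward_fpo(layer_sizes: list[int]) -> int:
    """Approximate FPO for one forward pass of a single input.

    Each output neuron needs n_in multiplications and n_in additions
    (n_in - 1 inside the dot product, plus 1 for the bias).
    Activation functions are ignored.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += 2 * n_in * n_out
    return total


if __name__ == "__main__":
    shallow = [10, 8, 1]        # hypothetical: one small hidden layer
    deep = [10, 64, 64, 64, 1]  # hypothetical: three wider hidden layers
    for name, sizes in (("shallow", shallow), ("deep", deep)):
        print(name, dense_param_count(sizes), dense_forward_fpo(sizes))
    # prints: shallow 97 176
    #         deep 9089 17792
```

Under this counting convention, the deeper network has roughly 94 times more parameters and about 100 times more FPO per input than the shallow one.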

Calculating the footprint of a large AI model using cloud resources

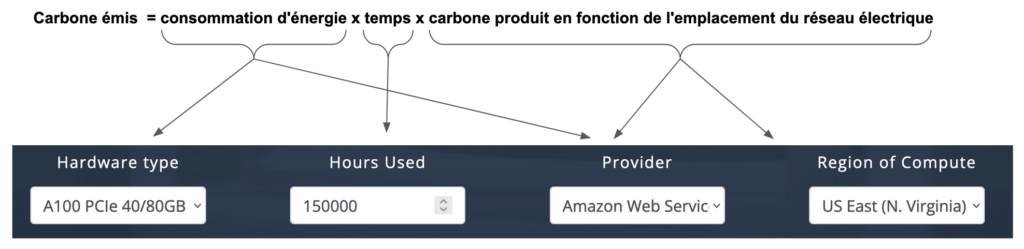

To calculate the footprint of a large AI model trained in the cloud, we need to know (at least) the hardware (mainly the GPU), the training time, the cloud provider and the geographic region. Figure 3 shows the equation.

Figure 3 – (Image credit to Mlco2.github.io).
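A minimal sketch of this kind of estimate, assuming its simplest form: energy = GPU power × training time, and emissions = energy × carbon intensity of the region’s power grid. Data-centre overhead is ignored here, and the GPU power, GPU-hours and carbon intensity in the example are hypothetical placeholder values, not figures taken from Figure 3 or from any calculator.

```python
"""Minimal estimate: emissions = power draw x training time x grid carbon intensity.

Assumptions: the GPUs run at their rated power for the whole run and no
data-centre overhead is added.
"""


def training_emissions_kg(gpu_power_watts: float, gpu_hours: float,
                          carbon_intensity_kg_per_kwh: float) -> float:
    """Return the estimated kg CO2-eq for a training run.

    gpu_hours is the total across all GPUs (e.g. 8 GPUs for 10 h = 80 GPU-hours).
    """
    energy_kwh = gpu_power_watts / 1000 * gpu_hours  # W -> kW, times hours -> kWh
    return energy_kwh * carbon_intensity_kg_per_kwh


if __name__ == "__main__":
    # Hypothetical inputs, for illustration only.
    power_w = 250    # rated power of one GPU, in watts
    hours = 1_000    # total GPU-hours
    intensity = 0.4  # kg CO2-eq per kWh in the chosen cloud region
    print(f"{training_emissions_kg(power_w, hours, intensity):.1f} kg CO2-eq")
```

Because the result scales linearly with each input, doubling the training time or moving to a region with twice the carbon intensity doubles the estimate.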

The footprint of the Stable Diffusion model

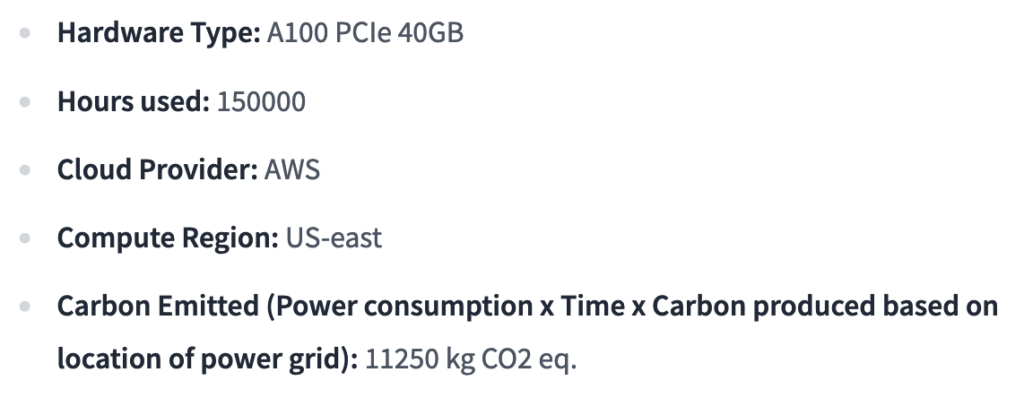

The Stable Diffusion model “is a text-to-image model capable of generating photo-realistic images given any text input” (Huggingface.co, N.A.). The team that trained this model calculated what they called the ‘Environmental Impact’, which is shown in Figure 4.

Figure 4 – (Image credit to Huggingface.co).

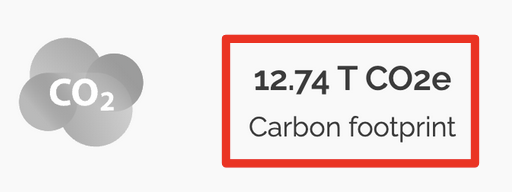

If we take this information (hardware, time, cloud provider and compute region) and recalculate the ‘Carbon Emitted’ using mlco2.github.io and green-algorithms.org, we get the results shown in Figures 5 and 6. Overall, both results are of the same order of magnitude: around 13 tons of CO2.

Figure 5 – Result from mlco2.github.io.

Figure 6 – Result from green-algorithms.org.
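Two calculators can give slightly different figures for the same run because they rely on different assumptions, for example about the grid’s carbon intensity or the data centre’s overhead (often expressed as Power Usage Effectiveness, PUE). The sketch below runs one hypothetical training run through two hypothetical sets of assumptions; these are not the parameters actually used by mlco2.github.io or green-algorithms.org.

```python
"""Sensitivity sketch: one training run under two sets of assumptions.

Both assumption sets are hypothetical; they are NOT the actual parameters
of any real calculator.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class Assumptions:
    name: str
    carbon_intensity_kg_per_kwh: float  # grid emissions in the compute region
    pue: float = 1.0                    # data-centre overhead; 1.0 means none


def emissions_kg(gpu_power_watts: float, gpu_hours: float, a: Assumptions) -> float:
    """kg CO2-eq = (kW x hours) x PUE x carbon intensity."""
    energy_kwh = gpu_power_watts / 1000 * gpu_hours * a.pue
    return energy_kwh * a.carbon_intensity_kg_per_kwh


if __name__ == "__main__":
    power_w, hours = 250, 1_000  # hypothetical run: 250 W GPU, 1,000 GPU-hours
    scenarios = [
        Assumptions("A", carbon_intensity_kg_per_kwh=0.40),
        Assumptions("B", carbon_intensity_kg_per_kwh=0.35, pue=1.2),
    ]
    results = {s.name: emissions_kg(power_w, hours, s) for s in scenarios}
    for name, kg in results.items():
        print(f"{name}: {kg:.0f} kg CO2-eq")
    low, high = min(results.values()), max(results.values())
    print(f"ratio: {high / low:.2f}")  # well below 10 means same order of magnitude
```

With these placeholder values the two estimates are 100 and 105 kg CO2-eq: the assumptions shift the result, but not its order of magnitude.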

13 tons of CO2 are roughly equivalent to:

- The electricity consumption of 9 average houses in France for one year;

- Almost 6 flights from Melbourne (Australia) to New York (USA);

- Driving around 65,000 kilometres in an average combustion-engine car.
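These comparisons can be turned into a small converter. The per-unit factors below are back-calculated from this article’s own figures (13 tons ≈ 9 houses ≈ 6 flights ≈ 65,000 km), so they are rough, illustrative values rather than official emission factors.

```python
"""Convert an emissions total into everyday equivalents.

Per-unit factors are back-calculated from the comparison in this article
(13 t CO2 ~ 9 French houses for a year ~ 6 Melbourne to New York flights
~ 65,000 km by car). They are illustrative, not official, values.
"""

REFERENCE_KG = 13_000

# kg CO2 per unit, implied by the comparison above (illustrative only)
FACTORS_KG_PER_UNIT = {
    "house-years of electricity in France": REFERENCE_KG / 9,
    "Melbourne to New York flights": REFERENCE_KG / 6,
    "km in an average combustion-engine car": REFERENCE_KG / 65_000,
}


def equivalents(emissions_kg: float) -> dict[str, float]:
    """Express emissions_kg as a count of each everyday activity."""
    return {label: emissions_kg / factor
            for label, factor in FACTORS_KG_PER_UNIT.items()}


if __name__ == "__main__":
    # Example: the ~500 t of CO2 reported for GPT-3 in the next section
    for label, count in equivalents(500_000).items():
        print(f"{count:,.0f} {label}")
```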

(Chat)GPT: what’s the footprint?

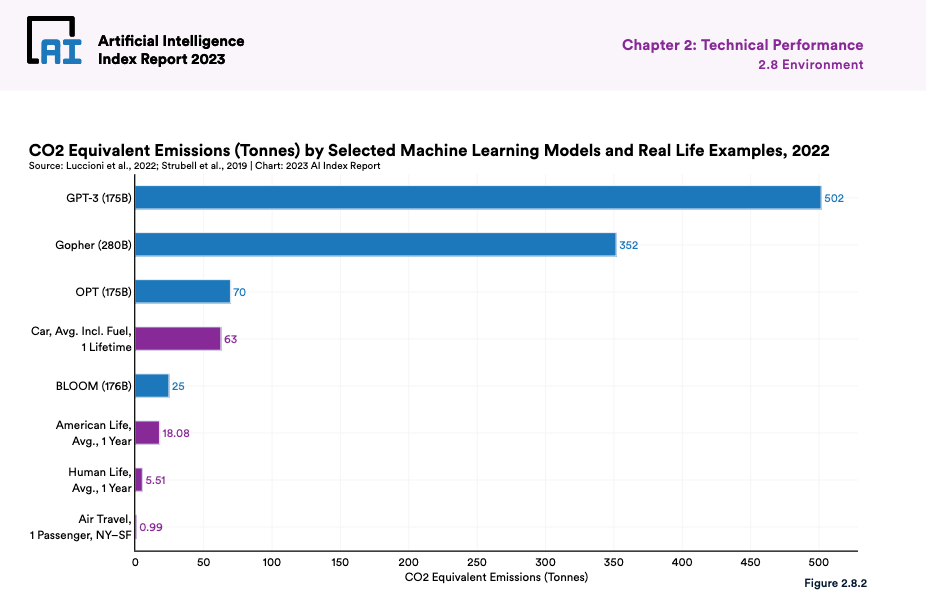

Stanford University’s 2023 AI Index Report states that training the GPT-3 model (from the model family behind the famous ChatGPT) emitted the equivalent of about 500 tons of CO2, as shown in Figure 7.

Figure 7 – CO2 Equivalent Emission by some ML models and Real Life examples (Aii.stanford.edu, 2023).

Something to reflect on

« … There is a world of difference between what computers can do and what society will choose to do with them. »

Seymour Papert, Mindstorms

References

- Aii.stanford.edu (2023). ‘AI Index Report – Chapter 2 – Environmental Impact of Select Large Language Models’ (Accessed: 21 March 2023).

- Dhar, P. (2020). ‘The carbon impact of artificial intelligence’ (Accessed: 21 March 2023).

- Gouvernement.fr (2016). ‘Indicateur Empreinte Carbone’ (Accessed: 21 March 2023).

- Green-algorithms.org (N.A.). ‘Calculator’ (Accessed: 21 March 2023).

- Huggingface.co (N.A.). ‘Stable Diffusion v1-5’ (Accessed: 21 March 2023).

- Mlco2.github.io (N.A.). ‘Machine Learning CO2 Impact’ (Accessed: 21 March 2023).

- RPI Cloud Assembly (N.A.). ‘Neural Network Mapping: Analysis from Above’ (Accessed: 21 March 2023).

- Wikipedia.org [1] (N.A.). ‘Artificial Intelligence’ (Accessed: 21 March 2023).

- Wikipedia.org [2] (N.A.). ‘Machine Learning’ (Accessed: 21 March 2023).

- Xing, W. and Du, D. (2018). ‘Dropout Prediction in MOOCs: Using Deep Learning for Personalized Intervention’ (Accessed: 21 March 2023).